First of a three-part series to commemorate the 50th anniversary of the Moon landings.

This year, July 20 will mark the 50th anniversary of the 1969 Apollo 11 Moon landing.[1,2] Between 1958 and 1965, the Soviet and American space programs attempted more than 36 unmanned Moon missions.[3] Of the 6 that succeeded, none achieved a survivable soft landing; just crashing into the Moon proved exceedingly difficult. Developing a guidance, navigation, and control (GNC) system for manned Apollo missions[4,9] was an enormous challenge. At its heart was a revolutionary new computer: the Apollo Guidance Computer (AGC).[5,6,7]

In commemoration of that historic achievement, this is the first of three articles about the AGC. Part 1 describes the hardware, Part 2 the software, and Part 3 its application in Moon missions. Although the jargon has changed in the decades since the AGC was developed, the HPC community will recognize many familiar themes, such as flops-per-watt power constraints, checkpoint/restart strategies, and the need for performance portability.

The AGC architecture: A giant leap in FLOPS per W, kg, and m³

Apollo needed a computer orders of magnitude better than those typical of the era:[36] one that used less power, weighed less, took up less space, was more reliable, and could operate in the extreme environmental conditions of spaceflight. In mid-1961, NASA accorded MIT/Draper Labs "sole source" status to design the AGC and soon afterward selected Raytheon to manufacture it.[7] Both organizations had been involved in developing the GNC system for the Polaris missile.[17]

The AGC (left) with its Display and Keyboard Interface (DSKY, right). Image source: NASA

The AGC was one of the first computers built from integrated circuits. Its logic was constructed entirely from flat-pack packages, each containing two 3-input NOR gates, produced by Fairchild Semiconductor[18] in an area that would later become known as Silicon Valley.[25,26] At its peak, the effort consumed over 60% of all integrated circuits produced in the United States. The AGC used a total of 5,600 NOR gates, operated at 1.024 MHz with a 16-bit word, and had 34 basic instructions, each microcoded into a 12-step sequence. It had 4 central registers plus 15 special-purpose registers.[16] The table below compares key AGC performance metrics with those of an early model of the IBM System/360. Both systems were released in 1966, the same year initial designs for ILLIAC IV,[22] the first massively parallel computer, were completed. Costing nearly $5 billion to develop (about 20% of the entire Apollo budget), IBM's System/360 was a big gamble and an even bigger success.[30] For comparison, the table also includes a row for IBM's newest AC922-based systems,[27] Summit[20] and Sierra.[24]
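Building an entire computer from a single gate type works because NOR is functionally complete: NOT, OR, and AND, and from them adders, can all be composed from NOR alone. The Python sketch below illustrates the idea with a hypothetical `nor3` helper as the only primitive, tying unused inputs to 0. It is a logic-level illustration, not the AGC's actual circuit design; in particular, the adder is a plain binary ripple-carry adder, whereas the AGC used ones'-complement arithmetic.

```python
def nor3(a, b=0, c=0):
    """3-input NOR, the only primitive: 1 only when all inputs are 0.
    Unused inputs are tied to 0, which leaves the NOR unaffected."""
    return int(not (a or b or c))

def not_(a):
    return nor3(a)                      # NOR of one active input = NOT

def or_(a, b):
    return not_(nor3(a, b))             # invert a NOR to get OR

def and_(a, b):
    return nor3(not_(a), not_(b))       # De Morgan: NOT(NOT a OR NOT b)

def xor(a, b):
    return and_(or_(a, b), not_(and_(a, b)))

def half_adder(a, b):
    """Return (sum, carry), built purely from nor3 calls."""
    return xor(a, b), and_(a, b)

def full_adder(a, b, carry_in):
    s1, c1 = half_adder(a, b)
    s, c2 = half_adder(s1, carry_in)
    return s, or_(c1, c2)

def add_words(x, y, bits=16):
    """Unsigned ripple-carry addition of two words, one NOR-built full
    adder per bit; the carry out of the top bit is dropped (mod 2**bits)."""
    result, carry = 0, 0
    for i in range(bits):
        s, carry = full_adder((x >> i) & 1, (y >> i) & 1, carry)
        result |= s << i
    return result

print(add_words(1234, 4321))
```

Every call ultimately bottoms out in `nor3`, which is the point: one gate type, replicated thousands of times, is enough for arithmetic.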

| System | Memory (KB) | Flop/s (F) | Power (W) | F/W | Mass (kg) | F/kg | Volume (m³) | F/m³ |
|---|---|---|---|---|---|---|---|---|
| AGC Block II [12] | 76 | 14,245 | 55 | 259.0 | 32 | 445 | 0.03 | 474,833 |
| IBM 360/20 [10,11] | 32 | 3,011 | 5,000 | 0.6 | 600 | 5 | 30 | 100 |
| IBM AC922 (Summit) [20,21,24] | 1E12 | 1.4E17 | 9.7E6 | 1.4E10 | 3.1E5 | 4.5E11 | 930 | 1.5E14 |

Note: one flop = one single-precision multiply plus one add.
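Each efficiency column is simply the flop rate divided by the corresponding resource. The short sketch below recomputes the ratios from the table's own (rounded) inputs and checks the AGC's 76 KB memory figure against the 2K erasable plus 36K fixed 16-bit words described in the next section. Treating 1K as 1,024 words and each word as 2 bytes is an assumption about how that figure was derived.

```python
# Inputs copied from the table: (flop/s, watts, kg, m^3).
SYSTEMS = {
    "AGC Block II": (14_245, 55, 32, 0.03),
    "IBM 360/20": (3_011, 5_000, 600, 30),
    "IBM AC922 (Summit)": (1.4e17, 9.7e6, 3.1e5, 930),
}

def efficiency(flops, watts, kg, m3):
    """Return (F/W, F/kg, F/m^3) for one system."""
    return flops / watts, flops / kg, flops / m3

for name, spec in SYSTEMS.items():
    fw, fkg, fm3 = efficiency(*spec)
    print(f"{name:<20} F/W={fw:.3g}  F/kg={fkg:.3g}  F/m3={fm3:.3g}")

# How many times more flop/s per watt the AGC delivers than the 360/20.
agc_vs_360 = (efficiency(*SYSTEMS["AGC Block II"])[0]
              / efficiency(*SYSTEMS["IBM 360/20"])[0])

# Assumed derivation of the AGC memory figure: (2K erasable + 36K fixed)
# words, with 1K = 1,024 words and 2 bytes per 16-bit word.
agc_memory_kb = (2 + 36) * 1024 * 2 / 1024
print(f"AGC/360 F/W: {agc_vs_360:.0f}x, AGC memory: {agc_memory_kb:.0f} KB")
```

On these inputs the AGC comes out roughly 430 times more power-efficient than the IBM 360/20, which is the "orders of magnitude" gap the section heading refers to.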

Rope core: A new type of nonvolatile memory

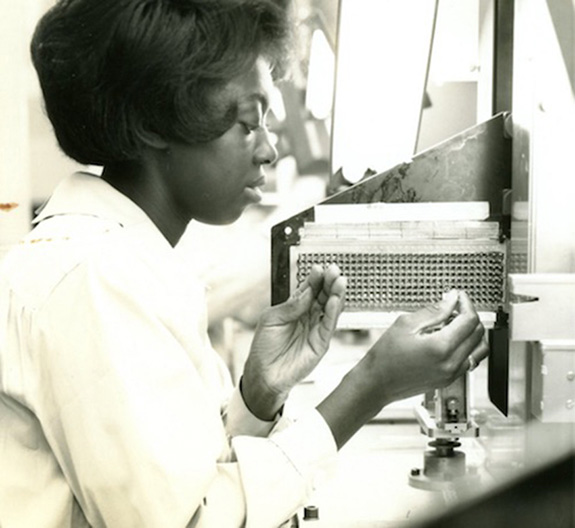

The AGC used two types of core memory:[14] erasable memory (2K words) built from coincident-current cores, and fixed (read-only) memory (36K words) built from rope cores,[15,29] a technology specifically designed for and unique to the AGC. Both were nonvolatile, providing extra protection against data loss during faults. The advantages of rope core were exceptional robustness and significantly higher density, because a single core stored 24 bits.[5] On the other hand, programming rope core took weeks of painstaking labor: thin wires were hand-woven[13] through (logical '1') or around (logical '0') arrays of cores. Bugs were therefore costly to correct and were often simply worked around, with additional steps in astronaut checklists or even revised mission parameters. Unable to fully automate this crucial manufacturing step, Raytheon instead hired an army of experienced textile workers from New England, all of them women. Remarkably, weaving's place in computing dates back more than 150 years earlier, to the Jacquard loom.[23,28] Present-day operating system terms such as core dump and core image derive from this early memory technology.

A worker weaves copper wires through an array of cores for the AGC (Photo courtesy of Raytheon Company)
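The through-or-around encoding of rope memory can be illustrated with a toy model. In the Python sketch below, which is a simplification and not the AGC's actual inhibit/sense wiring, each word gets its own wire and each of 16 cores stands for one bit position. Weaving a word's wire through core k stores a 1 in bit k; routing it around stores a 0. The function names `weave` and `read` are hypothetical, chosen for the example.

```python
# Toy model of woven read-only "rope" memory (illustrative only; the real
# AGC rope selected cores with inhibit lines and shared sense wires).
WORD_BITS = 16

def weave(words):
    """Return the rope: for each word, the set of cores its wire threads.

    Core k represents bit position k. Threading the wire THROUGH core k
    stores a 1; routing it AROUND core k stores a 0. The result is
    immutable, much as a woven rope could only be changed by rewiring.
    """
    return tuple(
        frozenset(k for k in range(WORD_BITS) if (word >> k) & 1)
        for word in words
    )

def read(rope, address):
    """Pulse the wire for `address`: only cores it threads induce a 1."""
    return sum(1 << k for k in rope[address])

program = [0o30001, 0o00006, 0o77777]   # arbitrary octal constants
rope = weave(program)
print([oct(read(rope, a)) for a in range(len(program))])
```

Even in this toy, the same 16 cores hold every word, so each core carries one bit for every wire that passes it; this hints at why rope reached much higher density than one-bit-per-core memory, and also why fixing a single bit meant physically rewiring.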

The Executive: An operating system with checkpoint/restart services

The AGC used a priority-driven, cooperative multitasking operating system called the Executive.[5] Priority-based job scheduling was revolutionary for its time. The Executive could detect a variety of hardware and software faults and provided restart utilities to recover from them. However, only the most critical programs were restart-protected.[19] Protecting a program required careful design, with periodic updates of waypoints (redundant copies of essential state) throughout its execution. Restart support consumed resources and complicated testing, and in 1968 an internal NASA report[19] raised significant doubts about its value. In Part 3 of this series, we'll describe how it later proved invaluable during the Apollo 11 landing.
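A priority-driven, cooperative scheduler with restart protection can be sketched in a few dozen lines. The Python below is a hypothetical illustration, not the Executive's actual design: jobs are generators that voluntarily yield (cooperative multitasking), the highest-priority ready job always runs next, and protected jobs have their latest state saved as a waypoint at every yield. A simulated `restart()` discards everything and resumes only the protected jobs from their waypoints. The class, method, and job names are all invented for the example.

```python
import heapq

class Executive:
    """Toy priority-driven, cooperative scheduler with restart protection.
    Hypothetical sketch: all names here are illustrative, not AGC code."""

    def __init__(self):
        self._ready = []      # min-heap of (-priority, seq, name)
        self._jobs = {}       # name -> (priority, job_fn, protected)
        self._gens = {}       # name -> running generator
        self._waypoints = {}  # name -> last checkpointed state
        self._seq = 0         # tie-breaker: FIFO among equal priorities
        self.log = []         # (name, state) after each cooperative step

    def add_job(self, name, priority, job_fn, protected=False, state=0):
        self._jobs[name] = (priority, job_fn, protected)
        self._gens[name] = job_fn(state)
        if protected:
            self._waypoints[name] = state   # initial waypoint
        self._push(name, priority)

    def _push(self, name, priority):
        heapq.heappush(self._ready, (-priority, self._seq, name))
        self._seq += 1

    def step(self):
        """Run the highest-priority job until it voluntarily yields."""
        _, _, name = heapq.heappop(self._ready)
        priority, _, protected = self._jobs[name]
        try:
            state = next(self._gens[name])
        except StopIteration:               # job finished: forget it
            del self._jobs[name], self._gens[name]
            self._waypoints.pop(name, None)
            return
        self.log.append((name, state))
        if protected:
            self._waypoints[name] = state   # checkpoint at every yield
        self._push(name, priority)

    def run(self):
        while self._ready:
            self.step()

    def restart(self):
        """Simulate a fault: drop all jobs, resume protected ones only."""
        jobs, saved = self._jobs, self._waypoints
        self._ready, self._jobs, self._gens, self._waypoints = [], {}, {}, {}
        for name, (priority, job_fn, protected) in jobs.items():
            if protected:
                self.add_job(name, priority, job_fn, True, state=saved[name])


def count_to(limit):
    """Hypothetical job factory: each step increments and yields a counter."""
    def job(state):
        while state < limit:
            state += 1
            yield state
    return job


ex = Executive()
ex.add_job("guidance", 20, count_to(5), protected=True)
ex.add_job("display", 10, count_to(2))
for _ in range(3):
    ex.step()        # guidance outranks display, so it runs first
ex.restart()         # display is lost; guidance resumes from waypoint 3
ex.run()
print(ex.log)
```

In this run, the unprotected display job is lost at the simulated fault, while the protected guidance job continues from its last waypoint instead of starting over. The extra bookkeeping on every yield is the kind of overhead that made restart support costly.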

The Interpreter: A domain-specific language

The Executive and other system functions were all implemented in native AGC assembly language.[31] However, solving complex 3D spatial navigation problems with this simple instruction set was tedious, error-prone, and memory-hungry. Early on, engineers designed a higher-level language, called the Interpreter,[5] to support the complex software required for GNC operations. Operands were scalar, vector, and matrix data types in single, double, and even triple precision. Instructions included vector and matrix arithmetic, transcendental functions, floating-point normalization, and miscellaneous control flow. Like p-code,[33] it was still a form of assembly language, but it operated at a much higher level of abstraction, which eased development, improved overall reliability, and, most importantly, reduced memory usage.
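The memory savings came from letting a single interpretive instruction do vector work that would otherwise take many native instructions. The Python sketch below is a hypothetical mini-interpreter in that spirit: a dispatch loop that runs (opcode, operand) pairs against one accumulator. The opcode names are loosely modeled on AGC mnemonics, but this is not the real instruction set or encoding, and it uses Python floats rather than the precision modes described above.

```python
import math

def cross(a, b):
    """3D vector cross product."""
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])

def interpret(program, memory):
    """Run (opcode, operand) pairs against a single accumulator.
    Opcodes are illustrative, loosely inspired by AGC mnemonics."""
    acc = None
    for op, arg in program:
        if op == "VLOAD":                     # load a vector
            acc = memory[arg]
        elif op == "VAD":                     # vector add
            acc = tuple(x + y for x, y in zip(acc, memory[arg]))
        elif op == "VXV":                     # vector cross product
            acc = cross(acc, memory[arg])
        elif op == "DOT":                     # dot product -> scalar
            acc = sum(x * y for x, y in zip(acc, memory[arg]))
        elif op == "UNIT":                    # normalize to unit length
            norm = math.sqrt(sum(x * x for x in acc))
            acc = tuple(x / norm for x in acc)
        elif op == "STORE":                   # write accumulator to memory
            memory[arg] = acc
        else:
            raise ValueError(f"unknown opcode {op!r}")
    return memory

# Unit normal to the plane spanned by position r and velocity v:
# four interpretive instructions instead of a long native sequence.
memory = {"r": (2.0, 0.0, 0.0), "v": (0.0, 3.0, 0.0)}
interpret([("VLOAD", "r"), ("VXV", "v"), ("UNIT", None), ("STORE", "n")],
          memory)
print(memory["n"])
```

The design trade-off is the one the Interpreter made: each interpretive instruction is compact in memory, while the dispatch loop costs execution time.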

Multiple spacecraft configurations: A performance portability challenge

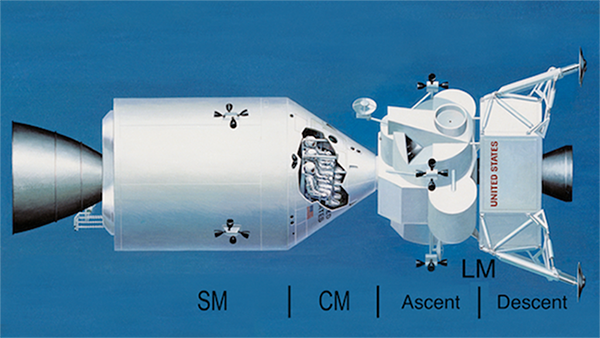

Apollo wasn't just a single spacecraft. It was two: the Command and Service Module (CSM) and the Lunar Module (LM). Each had its own AGC and was further divided into two stages. Depending on the phase of a mission,[1] the vehicles were joined in various configurations with dramatically different operating characteristics. Developing a single program, the Digital Autopilot (DAP),[8] that provided effective GNC for every configuration, including off-nominal cases, amounted to a significant performance portability problem. In Part 2 of this series, we'll discuss how software developers met this challenge.

Early NASA artist's rendition of the Apollo spacecraft: Command and Service Module (left); Lunar Module ascent and descent stages (right)

MTBF of 40,000 hours: Extreme computing reliability

The AGC may not have been extreme in scale, but it was extreme in reliability. Across the 42 Block II systems delivered, which accumulated 11,000 hours of vibration exposure and thermal cycling plus 32,500 hours of normal operation, only 4 hardware faults were observed,[12] none of them during an actual Moon mission. A conservative estimate of the AGC's mean time between failures (MTBF), calculated later, exceeded 40,000 hours, more than an order of magnitude better than typical machines of that era. Little did the AGC hardware engineers know that writing the software would present even greater challenges, ultimately becoming the rate-limiting factor in delivering flight-ready units.

Because autonomous guidance was so critical for Apollo, NASA funded the development of this revolutionary new computer to support it.[34,35] Half a century later, because self-driving capability is so critical to the auto industry, Tesla has developed its own custom, proprietary AI chip to support it.[32]

Author bio

Mark C. Miller is a computer scientist who has supported the WSC program at LLNL since 1995. Among other things, he contributes to VisIt, Silo, HDF5, and IDEAS-ECP.

References

- [1] Apollo flight plan diagram created by NASA in 1967 to illustrate the flight path and key mission events for the upcoming Apollo missions to the Moon. To allow readers to explore the image in more detail, we include a link to the full-resolution image here.
- [2] Overview of Apollo 11 Mission
- [3] List of Moon missions since 1958
- [4] What is Primary Guidance, Navigation and Control
- [5] Whole book: 'Apollo Guidance Computer Architecture and Operation'
- [6] Overview of AGC Architecture
- [7] YouTube Video of Rope Core Manufacture
- [8] Computer-Controlled Steering of the Apollo Spacecraft. Martin FH, Battin RH. 1968. J Spacecraft. 5(4):400-7
- [9] MIT Technical Report on Apollo Guidance and Navigation
- [10] IBM 360/20 Specs
- [11] IBM 360 Detailed Installation Manual
- [12] Reliability History of the AGC
- [13] Weaving Rope Core Memory
- [14] Description of Core Memory
- [15] Description of Rope Core Memory
- [16] Amazingly Detailed Presentation on Architecture and Operation of the AGC
- [17] Polaris Guidance System
- [18] Historical Note about Fairchild Semiconductor
- [19] AGC Restart System Design
- [20] ORNL Launch of Summit
- [21] Top Green 500 List, November 2018
- [22] Description of Illiac IV
- [23] Jacquard Loom & Computing
- [24] LLNL Description of Sierra
- [25] Historical Impact of AGC on Computing Technology
- [26] Historical Impact of AGC on Chip Manufacture
- [27] Description of IBM AC922 Systems
- [28] Illustrated History of Computers
- [29] Rope Memory Description
- [30] IBM $5B Gamble with System 360
- [31] AGC Assembly Language Manual
- [32] Tesla's new AI Chip
- [33] Example of a P-code language
- [34] NASA archive on Computers in Spaceflight
- [35] The need for an on-board computer
- [36] Computer History Timeline